This is a very interesting problem, since the skill level of professional play has clearly gone up. We’ve had similar ideas floating around the calculated.gg developer Discord for a while.

Then, recently, there was a Reddit post on this topic on /r/RocketLeagueEsports:

The general consensus in the post was that Season 1 players would be around Champ 1 or Champ 2 by today’s standards. Is this correct?

No one in Season 1 was hitting ceiling shots or flip resets, and it’s quite easy to see that the speed of play has increased dramatically. Since we have data all the way from Bronze 1 to 2000 MMR Grand Champion, we should be able to figure out where on that scale a given Season 1 replay sits. That’s what we’re going to talk about today. But first, let’s step back and talk about how we would even quantify skill level in the first place.

Statistics

If you’ve used calculated.gg before, you know that we offer a range of stats relating to positioning, ball possession, boost, and team play for every game submitted. In the database, we have access to the skill level (rank/MMR) and the stats of each player in each replay. Therefore there’s an easy way to relate skill level to each stat. We can simply place rank on the X axis, and the mean stat for that rank on the Y axis. This gives us easy-to-read graphs such as this one:
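As a rough illustration of that rank-versus-stat aggregation, here is a minimal sketch in plain Python. The row layout (`rank` and `aerials` keys) and the sample values are assumptions made up for the example, not the actual calculated.gg schema or data.

```python
from collections import defaultdict
from statistics import mean


def mean_stat_by_rank(rows, stat):
    """Group rows by rank and return {rank: mean of `stat`}, ordered by rank."""
    by_rank = defaultdict(list)
    for row in rows:
        by_rank[row["rank"]].append(row[stat])
    return {rank: mean(values) for rank, values in sorted(by_rank.items())}


# Made-up sample rows; real rows would come from the replay database.
rows = [
    {"rank": 19, "aerials": 9},
    {"rank": 13, "aerials": 2},
    {"rank": 13, "aerials": 4},
    {"rank": 19, "aerials": 11},
]

# Rank goes on the X axis, the mean stat for that rank on the Y axis.
curve = mean_stat_by_rank(rows, "aerials")
for rank, value in curve.items():
    print(rank, value)
```

Each key of `curve` is one point on the X axis and each value is the height of the graph at that rank.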

We even tweeted about it back in October 2018, with a link to an album of all the stats at that time compared to ranks. At the time, we were lacking data for Bronze through Gold, and that is still somewhat true today. Luckily, that most likely won’t be an issue when ranking Season 1 games. Surprising, right?

Some of these graphs are obvious. Like duh, GCs hit more aerials than Platinums. But it’s still cool to see the intuitions that most players have about skill shown in real data. There are also some interesting correlations there that may not be obvious. For instance, shot % goes down slightly as skill level goes up. This may be skewed a bit by the in-game shot detection, but players at low ranks have issues saving shots, even if they’re from similarly skilled players. On the other hand, Champion level players have an easy time saving even the craziest shots that their peers can put on net.

I won’t go too much more into the data right now. We’ll touch on this dataset and these visualizations more in another blog post.

Quantifying Skill Level

We have a list of stats from any given game, and we have the skill level of that game. This seems very similar to a standard machine learning regression problem.

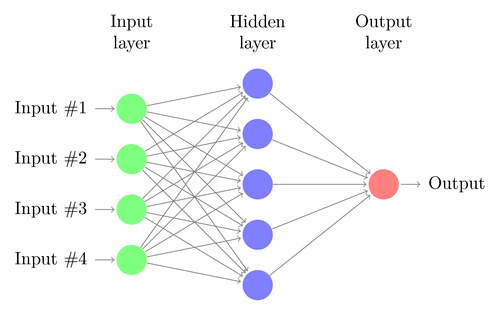

Given a set of features X (in this case, stats from a game), and an output value y (in this case, rank in integer form), can we effectively predict the output value given just the inputs?

$$X = \begin{bmatrix} x_{1} \\ x_{2} \\ \vdots \\ x_{m} \end{bmatrix}$$

A standard neural network will work for this, with an input for each stat, and a single output neuron:

Now this turned out to be an oversimplification of the problem. We have over 100 stats in the database, and this proved to be too much for a single network to handle reasonably. We ended up going with an ensemble method, which recasts the regression as a series of binary classification problems. There is one network per skill level, and each network is trained to tell whether a given game’s skill level is at or above that network’s own level. If it is, the network classifies the game as class “1”. If the game is below the network’s level, it is classified as class “0”. To detect the skill level at runtime, we simply have to find the last network that outputs class “1”, and we have our skill level.
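The labeling and decoding logic of that ensemble can be sketched as follows. This is only an illustration, not our training code: the networks themselves are left out, the function names and the 0.5 threshold are assumptions, and the level range of 10 to 19 is the one the models were trained on.

```python
# Levels covered by the ensemble: 10 (Platinum 1) through 19 (Grand Champion).
LEVELS = list(range(10, 20))


def targets_for(rank, levels=LEVELS):
    """Training label for each per-level network: 1 if the game's rank is at or above that level."""
    return [1 if rank >= level else 0 for level in levels]


def decode(outputs, levels=LEVELS, threshold=0.5):
    """Return the level of the last network that outputs class 1, or None if none do.

    `outputs` holds one score per network; the threshold is an assumed cutoff.
    """
    predicted = None
    for level, score in zip(levels, outputs):
        if score >= threshold:
            predicted = level
    return predicted


# A level-16 game should be labeled 1 for levels 10..16 and 0 for 17..19.
print(targets_for(16))
# Hypothetical network scores for one game; the last score above 0.5 is at level 16.
print(decode([0.9, 0.9, 0.8, 0.9, 0.7, 0.8, 0.6, 0.3, 0.2, 0.1]))
```

Taking the last network that fires, rather than the first one that does not, means a single low-level network misfiring does not drag the prediction down.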

We trained these networks on 20k games in only the last 3 months of each skill level from level 10 (Platinum 1) to level 19 (Grand Champion), in ranked standard. We had to use ranked standard since we (obviously) can’t rank private games and it’s the closest to actual RLCS matches.

After training, the accuracy looks like this:

The “rank difference” is how accurate the model is at predicting within X ranks. For example, “within 3 ranks” means guessing Champ level or higher for a single Grand Champion game. Keep in mind that this is for any given game, so even if the player did not perform at their skill level, we are still trying to guess it properly.
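For reference, a “within X ranks” accuracy can be computed along these lines. This is a minimal sketch; the function name and the sample levels are made up for illustration and are not our evaluation results.

```python
def accuracy_within(true_levels, predicted_levels, max_diff):
    """Fraction of games whose predicted level is within `max_diff` ranks of the true level."""
    if len(true_levels) != len(predicted_levels):
        raise ValueError("both lists must have the same length")
    if not true_levels:
        raise ValueError("need at least one game")
    hits = sum(
        abs(true - predicted) <= max_diff
        for true, predicted in zip(true_levels, predicted_levels)
    )
    return hits / len(true_levels)


# Made-up example: four games with true and predicted levels.
true_levels = [19, 19, 16, 13]
predicted_levels = [19, 16, 18, 13]

for max_diff in range(4):
    print(max_diff, accuracy_within(true_levels, predicted_levels, max_diff))
```

With a rank difference of 0, only exact matches count. Each extra rank of tolerance can only keep the accuracy the same or raise it.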

Later models will try to predict based on an average for a player. So, given 10-15 games from a single player, can we do a similar prediction? The data says (probably) yes, and it will be more accurate. However, it will be more difficult to show, since it is not tied to one single game but to multiple series.

Results

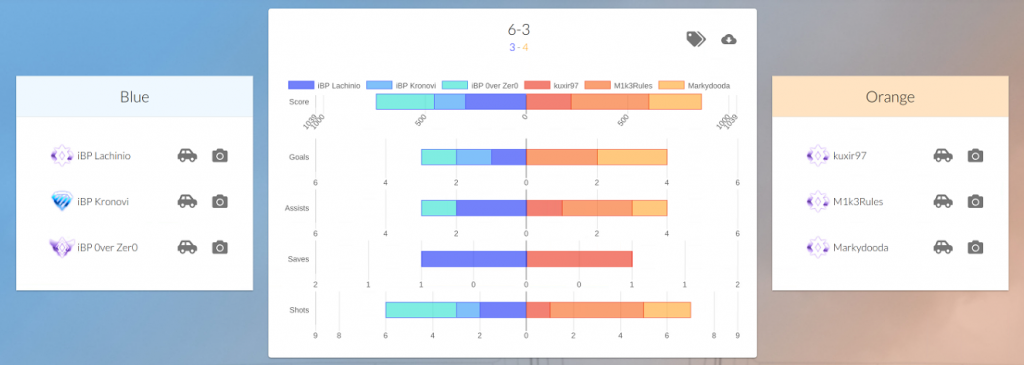

So now that we have models for ranking games, can we predict the rank of an RLCS S1 game? First, we should verify that we are even getting decent results on a recent RLCS game. If the models don’t predict high Champ/GC, we won’t be getting results that are even close to being accurate.

It seems to be near accurate. Not all of the games were as perfect as this one, obviously, but in general they predicted high Champ 3 to GC for all players.

Now let’s run this on an S1 game from the finals:

As you can see, the game is predicted to be around Diamond 3-Champ 2 level, and the other games in the series were predicted to be similar.

| Game | Kronovi | 0ver Zer0 | Lachinio | kuxir97 | Marky | M1K3 | Average |
| --- | --- | --- | --- | --- | --- | --- | --- |
| 1 | 19 | 17 | 15 | 18 | 18 | 19 | 17.7 |
| 2 | 15 | 18 | 15 | 18 | 18 | 15 | 16.5 |
| 3 | 15 | 18 | 17 | 17 | 17 | 17 | 16.8 |
| 4 | 17 | 17 | 17 | 18 | 13 | 15 | 16.2 |
| 5 | 15 | 18 | 17 | 18 | 15 | 19 | 17.0 |
| 6 | 17 | 17 | 17 | 18 | 14 | 18 | 16.8 |
| Average | 16.3 | 17.5 | 16.3 | 17.8 | 15.8 | 17.2 | 16.8 (Champ 1-2) |
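The averages in the table can be reproduced with a few lines of Python. The predicted levels are copied from the table. The level-to-rank mapping is an assumption: only level 10 (Platinum 1) and level 19 (Grand Champion) are stated in this post, and the levels in between are filled in from the usual rank ladder.

```python
from statistics import mean

# Assumed ladder; the post only states 10 = Platinum 1 and 19 = Grand Champion.
LEVEL_NAMES = {
    10: "Platinum 1", 11: "Platinum 2", 12: "Platinum 3",
    13: "Diamond 1", 14: "Diamond 2", 15: "Diamond 3",
    16: "Champion 1", 17: "Champion 2", 18: "Champion 3",
    19: "Grand Champion",
}

# Predicted level per player for games 1-6, copied from the table.
predictions = {
    "Kronovi": [19, 15, 15, 17, 15, 17],
    "0ver Zer0": [17, 18, 18, 17, 18, 17],
    "Lachinio": [15, 15, 17, 17, 17, 17],
    "kuxir97": [18, 18, 17, 18, 18, 18],
    "Marky": [18, 18, 17, 13, 15, 14],
    "M1K3": [19, 15, 17, 15, 19, 18],
}


def describe(level):
    """Name the rank, or the two adjacent ranks, that a possibly fractional level falls on."""
    low = int(level)
    if level == low:
        return LEVEL_NAMES[low]
    return f"{LEVEL_NAMES[low]} to {LEVEL_NAMES[low + 1]}"


player_avg = {name: mean(levels) for name, levels in predictions.items()}
overall = mean(level for levels in predictions.values() for level in levels)

for name, avg in player_avg.items():
    print(f"{name}: {avg:.1f}")
print(f"Overall: {overall:.1f} ({describe(overall)})")
```

The overall mean of 16.8 sits between level 16 and level 17, which is where the “Champ 1-2” label in the table comes from.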

That might seem low, but I suggest you take a look for yourself. Even though the playstyle is slow and messy, just like Champ 1, they’re not going to be equivalent. General technical skill is low (no fast aerials or ceiling touches), but there are still team plays that aren’t going to be found in Champ 1 or 2. That is a result of these teams playing together, professionally, for a significant amount of time. Even without the speed and tech from recent games, they would still be a force to be reckoned with in ranked.

Going Forward

Now that we have these models trained, can we do this type of detection for any game that is submitted to us? The answer is yes, we can. These models are going to be deployed onto the main calculated.gg website in the coming weeks so that it will work with any game you submit.

Keep in mind that these models are based on a single game performance, so it’s going to be very difficult to predict every player’s skill in every game correctly. Nevertheless, the models perform well enough. If you consider the mean predicted skill level for a match, it is usually close to the real skill level, at least for matches within the last 3 months.